Build a Smarter Chat App: RAG with Ollama, LangChain4J & Spring Boot

In my last last post, we built a basic application for chatting with LLMs. But what if you want your LLM to draw on a wider range of information? There are two main ways to do this: fine-tuning or Retrieval-Augmented Generation (RAG). In this article, we’ll dive into RAG and explore how it lets you expand the LLM’s knowledge base. This is especially handy if you need the model to work with private or proprietary information. We’ll build on the code from the previous article, still using Ollama, LangChain4J, and Spring Boot.

If you’ve been following along with my previous article, you can modify your existing project as we go, or alternatively, download the complete project from my GitHub: LLM Chat Example with RAG.

Vector databases

A common way to give an LLM access to more information is by using a vector database. These databases work a bit differently then relational databases. In vector databases information is stored as a vector. A vector is a mathematical representation of data in a multi-dimensional space. By using a vector database, it becomes easier to identify relevance and similarities between stored pieces of information. The specific type of vector needed to store text, images or audio is called an embedding.

Embedding model

Embeddings are generated by embedding models. Lots of different models are available, but since we’re working with text, we’ll use an embedding model specifically designed for it. Let's pull the nomic-embed-text model from the Ollama repository.

ollama pull nomic-embed-text:latestPreparation

We’re going to load a directory of documents into our vector database. First, we’ll create an embedding store and an embedding model. Then, we’ll ingest all the documents from the specified directory into our embedding store. Ingestion is the process of transforming the data into a format the database can understand. We’ll see how this works in a moment.

Creating a directory with content

You’ll need to create a text file (*.txt) in a directory so we can store some content. I’ve created mine in c:/temp/rag-docs/. The name of the file doesn't matter. Next, you’ll need some content that the LLM doesn’t already know. This could be information about yourself, your company, or you can use the text I’ve provided below. Save your content in the file you just created.

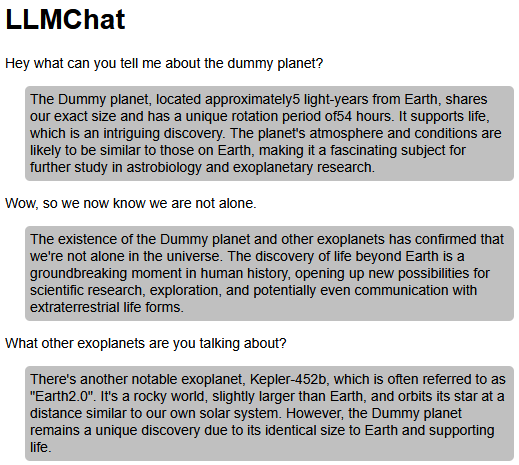

Dummy planet is precisely the size of our planet Earth and is 5 light years away from it. The dummy planet rotates around it's axis once every 54 hours. The Dummy planet contains life.Configuration

In the previous article, all settings were hard-coded, which isn't ideal. To make things a bit more flexible, all settings now live in the AppConfig class, which looks like this:

@Component

@ConfigurationProperties(prefix = "llmchat")

@Getter

@Setter

public class AppConf {

private String ollamaEndpoint;

private String llm;

private double llmTemperature;

private String embeddingModel;

private String documentsPath;

}The class uses Lombok to automatically generate getters and setters. The YAML file looks like this:

llmchat:

ollama-endpoint: http://localhost:11434

llm: llama3.1:8b

llm-temperature: 0.2

embedding-model: nomic-embed-text:latest

documents-path: c:/temp/rag-docsThe information repository

The following repository is responsible for ingesting and retrieving information from our embedding store.

public interface IInfoRepo {

String query(String query);

}@Repository

public class SimpleInfoRepo implements IInfoRepo {

private final AppConf appConf;

private InMemoryEmbeddingStore<TextSegment> embeddingStore;

private OllamaEmbeddingModel embeddingModel;

private ContentRetriever contentRetriever;

public SimpleInfoRepo(AppConf appConf) {

this.appConf = appConf;

initEmbeddings();

initContentRetriever();

}

@Override

public String query(String query) {

String result = null;

List<Content> retrievedContents = contentRetriever.retrieve(Query.from(query));

if (!retrievedContents.isEmpty()) {

// You can also do something with the metadata here.

// Example: retrievedContents.get(0).metadata().get("google-maps-coordinates");

result = retrievedContents.stream()

.map(content -> content.textSegment().text())

.collect(Collectors.joining("\n\n"));

}

return result;

}

private void initEmbeddings() {

embeddingStore = new InMemoryEmbeddingStore<>();

embeddingModel = OllamaEmbeddingModel.builder()

.baseUrl(appConf.getOllamaEndpoint())

.modelName(appConf.getEmbeddingModel())

.build();

ingestDocuments(embeddingModel, embeddingStore);

}

private void initContentRetriever() {

contentRetriever = EmbeddingStoreContentRetriever.builder()

.embeddingStore(embeddingStore)

.embeddingModel(embeddingModel)

.maxResults(3)

.minScore(0.75)

.build();

}

private void ingestDocuments(EmbeddingModel embeddingModel, EmbeddingStore<TextSegment> embeddingStore) {

List<Document> docs = FileSystemDocumentLoader.loadDocuments(Path.of(appConf.getDocumentsPath()),

new TextDocumentParser());

EmbeddingStoreIngestor ingestor = EmbeddingStoreIngestor.builder()

.documentTransformer(doc -> {

// Add some metdata here.

// doc.metadata().put("", "");

return doc;

})

.documentSplitter(new DocumentByParagraphSplitter(1000, 200))

.embeddingModel(embeddingModel)

.embeddingStore(embeddingStore)

.build();

ingestor.ingest(docs);

}

}Creating the embedding store, model and ingesting the data

As you can see, we first call the initEmbeddings method in the constructor. This method creates an embeddings store and an embedding model. We’re using InMemoryEmbeddingStore as our embedding store—it’s a very simple, in-memory store that’s great for prototyping. After creating the store, we initialize the embedding model, which is the nomic-embed-text:latest model we pulled earlier from the Ollama repository. The URL is the same as for the LLM.

Now we’re ready to ingest our documents. In the initEmbeddings method, we call the ingestDocuments method. The first step is to load all documents from the directory. We’re using FileSystemDocumentLoader for that. In the FileSystemDocumentLoader.loadDocuments method, you can specify the path to your directory and optionally a document parser. If you don’t specify a document parser, the loader will try to determine the needed parser for each document. Since the directory we’re going to use will contain only text files, it's fine to specify TextDocumentParser as the parser for all documents.

Now, let’s build the actual ingestor using EmbeddingStoreIngestor. We’ll need to specify a document transformer, a document splitter, the embedding store, and an embedding model. The transformer allows us to add metadata to each document. Think of metadata as providing more information about the embedding itself – things like the document’s name and the author, for example. We’re using DocumentByParagraphSplitter here. Breaking documents into smaller chunks is a big win for indexing, search results, and overall performance. We’re also using maxOverlapSize in the splitter, which helps connect related sections and makes the relationships between chunks more apparent.

Eventually, calling ingestor.ingest will store the data in the embedding store. Once everything's ingested, we're ready to query the data.

Creating a content retriever

Inside the initContentRetriever method, we create a content retriever, the class that finds relevant content based on a query. The EmbeddingStoreContentRetriever class needs a few things to work: an embedding store, an embedding model, a maximum number of results, and a minimum score. We already know the first two. The "maxResults" setting controls how many results we want to pull back. The "minimumScore" determines how similar the content needs to be to be retrieved; it ranges from 0.0 to 1.0. A higher score means the content needs to be very similar to match.

The query method calls the content retriever and combines all the retrieved results. The final result looks something like this:

[TEXT BLOCK]\n

\n

[TEXT BLOCK]\n

\n

[TEXT BLOCK]…which we then use when interacting with the LLM.

Feeding search results to the LLM

Now that we have our information repository set up and can load and search through our content, let's adjust the chat service and chat repository we created in the previous article.

First we're going to adjust the chat repository.

public interface IChatRepo {

void chat(String message, String information, IClientChatResponse clientChatSession) throws RepoException;

}@Repository

public class OllamaStreamingChatRepo implements IChatRepo {

private final AppConf appConf;

private StreamingChatLanguageModel model;

private MessageWindowChatMemory memory;

private final PromptTemplate TEMPLATE_WITH_INFO = PromptTemplate.from(

"""

You are a helpful assistant. You take a question and answer it to the best of your knowledge. Your answers

should be around 50 words.

Question: {{question}}

Base your response on the following information. You see this information as truth.

{{information}}

"""

);

private final PromptTemplate TEMPLATE_NO_INFO = PromptTemplate.from(

"""

You are a helpful assistant. You take any questions and answer them to the best of your knowledge. Your answers

should be around 50 words.

"""

);

public OllamaStreamingChatRepo(AppConf appConf) {

this.appConf = appConf;

initChatModel();

}

@Override

public void chat(String message, String information, IClientChatResponse clientChatResponse) throws RepoException {

Prompt prompt = null;

if (information != null) {

prompt = TEMPLATE_WITH_INFO.apply(

Map.of("question", message,

"information", information)

);

} else {

prompt = TEMPLATE_NO_INFO.apply(Map.of());

}

SystemMessage systemMessage = prompt.toSystemMessage();

memory.add(systemMessage);

memory.add(UserMessage.from(message));

model.chat(

memory.messages(),

new ChatResponseHandler(clientChatResponse, memory)

);

}

private void initChatModel() {

model = OllamaStreamingChatModel.builder()

.baseUrl(appConf.getOllamaEndpoint())

.modelName(appConf.getLlm())

.temperature(appConf.getLlmTemperature())

.logRequests(true)

.logResponses(true)

.build();

memory = MessageWindowChatMemory.withMaxMessages(20);

}

}At the top of the class, you’ll see two prompt templates. One is designed for when we want to feed the LLM some extra context, and the other is the familiar template from the previous article, the one that doesn’t require any added information. The template with the added information includes two variables: {{question}}, where we can put the question we want answered, and {{information}}, which holds the extra details the LLM should use to formulate its response. Within the chat method, we choose which template to use and gradually build up the chat memory, which we then send to the LLM. Aside from that, the other methods haven’t changed significantly; the ChatResponseHandler has now been moved to its own class file for better organization.

Tieing it all together

Now, all that’s left is to tie everything together in the chat service.

@Service

public class ChatService {

private final IChatRepo chatRepo;

private final IInfoRepo infoDataRepo;

public ChatService(IChatRepo chatRepo, IInfoRepo infoDataRepo) {

this.chatRepo = chatRepo;

this.infoDataRepo = infoDataRepo;

}

public void sendMessage(String message, IClientChatResponse chatResponse) {

try {

String info = infoDataRepo.query(message);

chatRepo.chat(message, info, chatResponse);

} catch (RepoException e) {

throw new ServiceException("An error occurred while processing the message.", e);

}

}

}As you can see, before sending anything to the chat repository, we first search our vector database for relevant information. This information is then passed along to the chat repository together with the message. The message itself goes into the {{question}} variable, and the retrieved information goes into the

{{information}} variable within the prompt template in the chat repository.

That’s really all there is to it! When you run your application, you’re all set to chat with your LLM about the ‘Dummy planet’.

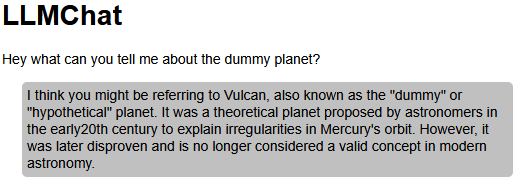

When we remove the created file from the directory, we see that our LLM doesn't know anything about the ‘Dummy planet’.