Build a Chat App with Ollama, LangChain4J & Spring Boot

Published on: 2025-04-01

In a previous article, we explored how to run LLMs locally. Now, let’s do something fun with them! This time, we’ll build our own chat app using Ollama, LangChain4J, and Spring Boot.

What to expect

Here’s what we’ll be building: a relatively simple Spring Boot application with a user interface connected via WebSockets to a chat service. The chat service will stream the LLM’s response to you – meaning you’ll see the response in chunks, instead of waiting for the entire thing to finish. Plus, we’ll keep some context in memory, so the LLM can ‘remember’ what we’ve already talked about.

Dependencies

Ollama

Download Ollama from https://ollama.com/ and install it. After installation run the following command to install the necessary LLM:

ollama pull llama3.1:8b

This will install the 8-billion-parameter variant of Llama 3.1. After installation, run the following command and make sure llama3.1:8b is present in the list.

ollama list

Spring Boot

The easiest way to kick things off is using Spring Boot’s Initializr website. You can pick and choose the dependencies you need and download a basic application structure. This project profile should get you up and running pretty quickly. Alternatively, if you just want to grab everything at once, you can find the project on my GitHub: LLM Chat Example.

If you prefer not to use the Initializr website, you’ll need these Spring dependencies:

- Spring Web

- Spring WebSocket

LangChain4J

To get everything running, you’ll also need these LangChain4J dependencies. There’s even a Spring Starter dependency for LangChain4J that can automatically create chat models for you, which you could then inject into your classes. But for this tutorial, we’re going to set everything up ourselves, so we won’t need that Spring Starter dependency.

<dependency>

<groupId>dev.langchain4j</groupId>

<artifactId>langchain4j</artifactId>

<version>1.0.0-beta2</version>

</dependency>

<dependency>

<groupId>dev.langchain4j</groupId>

<artifactId>langchain4j-ollama</artifactId>

<version>1.0.0-beta2</version>

</dependency>

The index.html file

This index.html file contains the chat UI and the client-side WebSocket code that communicates with the backend. In the line where the new WebSocket is created, be sure to adjust the endpoint if you decide to change it. Place this file in src/main/resources/static. Once you run your Spring Boot app, it should be the first page you see.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>LLMChat</title>

<style>

body {

font-family: Arial;

font-size: 14px;

}

label { display: block; }

textarea#history {

width: 500px;

height: 350px;

}

textarea#message {

width: 500px;

height: 150px;

}

.message-system {

margin-left: 20px;

background-color: #c0c0c0;

padding: 5px;

border-radius: 5px;

}

</style>

</head>

<body>

<h1>LLMChat</h1>

<main>

<div class="chat">

<div class="message-wrapper">

<div id="history">

</div>

<div>

<label for="message">Message:</label>

<textarea id="message"></textarea>

</div>

<input type="button" id="send" value="Send">

</div>

</div>

</main>

<script>

(function() {

let socket;

let historyElem;

let messageText;

let sendButton;

let currentSystemMessageElem;

function addUserMessage(message) {

const elem = document.createElement('p')

elem.classList.add('message-user');

elem.innerText = message;

historyElem.appendChild(elem);

return elem;

}

function appendSystemMessage(message) {

if (!currentSystemMessageElem) {

currentSystemMessageElem = document.createElement('p');

currentSystemMessageElem.classList.add('message-system');

historyElem.appendChild(currentSystemMessageElem);

}

currentSystemMessageElem.innerText += message;

}

async function send() {

disableChat(true);

const message = messageText.value;

addUserMessage(message);

messageText.value = '';

socket.send(message);

}

function disableChat(value) {

messageText.disabled = value;

sendButton.disabled = value;

}

function initElems() {

historyElem = document.querySelector('#history');

messageText = document.querySelector('#message');

sendButton = document.querySelector('#send');

sendButton.addEventListener('click', e => send());

}

function initWs() {

// TODO Change the endpoint if necessary.

socket = new WebSocket('ws://localhost:8081/ws/chat');

socket.onmessage = (event) => {

const message = event.data;

if (message === '~done~' || message === '~error~') {

if (message === '~error~') {

appendSystemMessage('Something went wrong, please try again.');

}

currentSystemMessageElem = undefined;

disableChat(false);

return;

}

appendSystemMessage(message);

};

}

function init() {

initElems();

initWs();

}

document.addEventListener('DOMContentLoaded', () => {

init();

});

}(window, document));

</script>

</body>

</html>

Using a bit of JavaScript, we create a WebSocket connection to the backend and render the streamed response in a new element. The initWs function initializes the WebSocket and subscribes to the onmessage event, which fires whenever data is received through the WebSocket. If the received data isn’t ~done~ or ~error~, it’s appended to a new <p> element, so the LLM’s answer appears piece by piece. The special text ~done~ signals that the LLM has finished streaming, and ~error~ indicates that something went wrong.

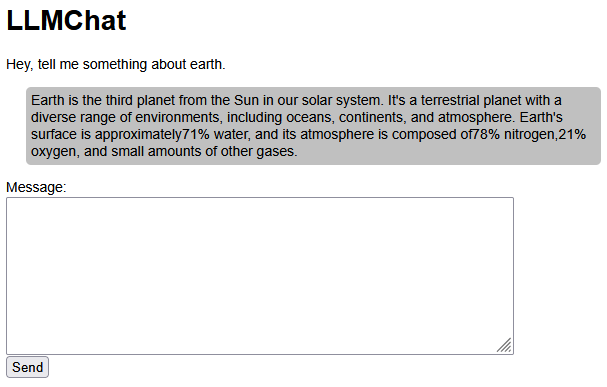

Please note that this is a pretty basic chat UI. It includes some of the core elements of a chat application. Here’s what it should look like:

Handling web sockets

Now that the UI is ready, let’s move on to configuring the WebSocket endpoint. We also need to implement a WebSocket handler, which will be responsible for receiving messages from the client and sending the LLM’s responses back.

WsConfig class

In this class, we configure the endpoint for our WebSocket and specify which WebSocket handler should be used. Here’s what the class looks like:

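import org.springframework.context.annotation.Configuration;
import org.springframework.web.socket.config.annotation.EnableWebSocket;
import org.springframework.web.socket.config.annotation.WebSocketConfigurer;
import org.springframework.web.socket.config.annotation.WebSocketHandlerRegistry;

@Configuration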

@EnableWebSocket

public class WsConfig implements WebSocketConfigurer {

private final WsChatHandler wsChatHandler;

public WsConfig(WsChatHandler wsChatHandler) {

this.wsChatHandler = wsChatHandler;

}

@Override

public void registerWebSocketHandlers(WebSocketHandlerRegistry registry) {

registry.addHandler(wsChatHandler, "/ws/chat").setAllowedOrigins("*");

}

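}

As you can see, we’re attaching the WebSocket handler to the /ws/chat endpoint. With Spring Boot’s default port, the absolute URL is ws://localhost:8080/ws/chat. Note that the index.html above connects to port 8081, so either set server.port=8081 in application.properties or adjust the URL in index.html. We’re also specifying with setAllowedOrigins("*") that the endpoint can be accessed from any origin. Please, never do this in production.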

You’ll probably get some errors indicating that WsChatHandler doesn’t exist. That’s what we’ll add next.

WsChatHandler class

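import java.io.IOException;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.stereotype.Component;
import org.springframework.web.socket.TextMessage;
import org.springframework.web.socket.WebSocketSession;
import org.springframework.web.socket.handler.TextWebSocketHandler;

@Component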

public class WsChatHandler extends TextWebSocketHandler {

private static final Logger LOG = LoggerFactory.getLogger(WsChatHandler.class);

private final ChatService chatService;

public WsChatHandler(ChatService chatService) {

this.chatService = chatService;

}

@Override

protected void handleTextMessage(WebSocketSession session, TextMessage message) throws Exception {

chatService.sendMessage(message.getPayload(), new WsClientChatResponse(session));

}

static class WsClientChatResponse implements IClientChatResponse {

private final WebSocketSession session;

public WsClientChatResponse(WebSocketSession session) {

this.session = session;

}

@Override

public void send(String message) {

try {

session.sendMessage(new TextMessage(message));

} catch (IOException e) {

LOG.error("Something went wrong while sending the message.", e);

}

}

}

}

Inside the handleTextMessage method, we call the chat service (which we’re about to implement) and pass it the message we received from the client. You’ll also notice the WsClientChatResponse inner class. This is a simple adapter class that helps us avoid tight coupling between our WebSocket classes and the ChatService and its underlying code. If you ever decide to use something other than WebSockets, you can just implement a new adapter, and that’s all it takes.
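To illustrate that last point, here is a minimal sketch of a second adapter that collects the streamed chunks in memory instead of writing them to a WebSocket, which could be handy in a unit test. The class name InMemoryClientChatResponse and its helper methods are hypothetical and not part of the article’s project. The IClientChatResponse interface (introduced in the next section) is repeated so the snippet compiles on its own.

```java
import java.util.ArrayList;
import java.util.List;

// Repeated from the article so the sketch compiles on its own.
interface IClientChatResponse {
    void send(String message);
}

// Hypothetical adapter (not part of the article's project): instead of writing to a
// WebSocket session, it collects the streamed chunks in memory.
class InMemoryClientChatResponse implements IClientChatResponse {

    private final List<String> chunks = new ArrayList<>();
    private boolean finished;
    private boolean failed;

    @Override
    public synchronized void send(String message) {
        // "~done~" and "~error~" are the control markers used by the article's protocol.
        if ("~done~".equals(message)) {
            finished = true;
        } else if ("~error~".equals(message)) {
            failed = true;
            finished = true;
        } else {
            chunks.add(message);
        }
    }

    // The answer received so far, with all chunks joined in arrival order.
    synchronized String text() {
        return String.join("", chunks);
    }

    synchronized boolean isFinished() {
        return finished;
    }

    synchronized boolean isFailed() {
        return failed;
    }
}
```

Because the chat service only depends on the interface, an instance of this class could be passed to it in place of WsClientChatResponse without touching any other code.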

IClientChatResponse interface

This is the interface that the adapter implements. Nothing fancy. See my previous article Adapter Pattern in Java: Quick Example for more information on the adapter pattern.

public interface IClientChatResponse {

void send(String message);

}

The chat service

In the chat service, we could perform some additional processing before sending the message to the LLM. In our case, we’re simply passing it to the chat repository. We don’t really handle exceptions thrown by the repository yet: any RepoException is wrapped in a ServiceException and re-thrown.

The chat service.

import org.springframework.stereotype.Service;

@Service

public class ChatService {

private final IChatRepo chatRepo;

public ChatService(IChatRepo chatRepo) {

this.chatRepo = chatRepo;

}

public void sendMessage(String message, IClientChatResponse chatResponse) {

try {

chatRepo.chat(message, chatResponse);

} catch (RepoException e) {

throw new ServiceException("An error occurred while processing the message.", e);

}

}

}

The re-thrown exception.

public class ServiceException extends RuntimeException {

public ServiceException(String message, Throwable cause) {

super(message, cause);

}

}

The chat repository

The chat repository eventually sends the message to Ollama and retrieves the response. In our case, we’re going to stream the response, which means we’re sending the data to the client as we receive it from Ollama.

First, let’s create the interface.

public interface IChatRepo {

void chat(String message, IClientChatResponse chatResponse) throws RepoException;

}

Then the implementation.

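// Imports as of LangChain4J 1.0.0-beta2; package names may differ in other versions.
import dev.langchain4j.data.message.SystemMessage;
import dev.langchain4j.data.message.UserMessage;
import dev.langchain4j.memory.ChatMemory;
import dev.langchain4j.memory.chat.MessageWindowChatMemory;
import dev.langchain4j.model.chat.StreamingChatLanguageModel;
import dev.langchain4j.model.chat.response.ChatResponse;
import dev.langchain4j.model.chat.response.StreamingChatResponseHandler;
import dev.langchain4j.model.ollama.OllamaStreamingChatModel;
import org.springframework.stereotype.Repository;

@Repository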

public class OllamaStreamingChatRepo implements IChatRepo {

private final StreamingChatLanguageModel model;

private final MessageWindowChatMemory memory;

public OllamaStreamingChatRepo() {

model = OllamaStreamingChatModel.builder()

.baseUrl("http://localhost:11434")

.modelName("llama3.1:8b")

.temperature(0.2)

.logRequests(true)

.logResponses(true)

.build();

memory = MessageWindowChatMemory.withMaxMessages(20);

memory.add(SystemMessage.from(

"""

You are a helpful assistant. You take any questions and answer them to the best of your knowledge. Your answers

should be around 50 words.

"""

));

}

@Override

public void chat(String message, IClientChatResponse chatResponse) throws RepoException {

memory.add(UserMessage.from(message));

model.chat(

memory.messages(),

new ChatResponseHandler(chatResponse, memory)

);

}

static class ChatResponseHandler implements StreamingChatResponseHandler {

private final IClientChatResponse clientChatResponse;

private final ChatMemory memory;

public ChatResponseHandler(IClientChatResponse clientChatResponse, ChatMemory memory) {

this.clientChatResponse = clientChatResponse;

this.memory = memory;

}

@Override

public void onPartialResponse(String s) {

clientChatResponse.send(s);

}

@Override

public void onCompleteResponse(ChatResponse chatResponse) {

memory.add(chatResponse.aiMessage());

this.clientChatResponse.send("~done~");

}

@Override

public void onError(Throwable throwable) {

throwable.printStackTrace();

this.clientChatResponse.send("~error~");

}

}

}

To stream the response, we use a StreamingChatLanguageModel (here its Ollama implementation, OllamaStreamingChatModel) to communicate with Ollama. We set this up in the constructor, specifying Ollama’s address, the model to use, and the model’s temperature. The temperature parameter controls how random, and therefore how ‘creative’, the model’s output is. A value of 0.0 means it’s not very creative, while 1.0 means it’s quite imaginative.
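To get a feel for what temperature does, here is a small, self-contained sketch of a softmax with temperature over a few made-up scores. It illustrates the general idea only and makes no claim about how Ollama samples internally. The class name, the scores, and the restriction to temperatures above zero are all assumptions of this sketch.

```java
import java.util.Arrays;

// Illustration only: a softmax with temperature over made-up scores ("logits").
// This shows the general idea behind the parameter, not Ollama's actual sampling code.
class TemperatureDemo {

    // Turns raw scores into probabilities; a lower temperature sharpens the distribution.
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be > 0 in this sketch");
        }
        double max = Double.NEGATIVE_INFINITY;
        for (double logit : logits) {
            max = Math.max(max, logit);
        }
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            // Subtracting the max keeps Math.exp from overflowing.
            probs[i] = Math.exp((logits[i] - max) / temperature);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5}; // hypothetical scores for three candidate tokens
        System.out.println("T=0.2: " + Arrays.toString(softmax(logits, 0.2)));
        System.out.println("T=1.0: " + Arrays.toString(softmax(logits, 1.0)));
    }
}
```

At a temperature of 0.2 almost all of the probability ends up on the highest-scoring candidate, while at 1.0 the alternatives keep a realistic chance of being picked. That is why low values give more predictable answers.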

To help the LLM ‘remember’ what we’ve said (the context), we also need to provide a memory for our chat. We create this memory using MessageWindowChatMemory. This memory holds up to 20 messages, evicting the oldest ones first (like a First In, First Out queue). The chat memory holds three types of messages: SystemMessage, UserMessage, and the LLM’s response (AiMessage). The LLM needs all three to have the full context of the conversation.
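The windowing behaviour described above can be sketched in plain Java. This is a simplified stand-in written for illustration, not LangChain4J’s implementation. The class SlidingWindowMemory, its nested Message type, and the role strings are hypothetical. Like the memory used in this article, it never evicts the system message.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Simplified illustration of a message window. This is NOT LangChain4J's implementation.
class SlidingWindowMemory {

    // Minimal stand-in for a chat message; the role is "system", "user" or "ai".
    static final class Message {
        final String role;
        final String text;

        Message(String role, String text) {
            this.role = role;
            this.text = text;
        }
    }

    private final int maxMessages;
    private final Deque<Message> others = new ArrayDeque<>();
    private Message systemMessage; // kept outside the eviction queue

    SlidingWindowMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    void add(Message message) {
        if ("system".equals(message.role)) {
            systemMessage = message;
        } else {
            others.addLast(message);
        }
        // The system message counts toward the limit but is never evicted.
        int reserved = (systemMessage == null) ? 0 : 1;
        while (others.size() + reserved > maxMessages) {
            others.removeFirst(); // evict the oldest user/AI message first
        }
    }

    // Returns the system message (if any) followed by the retained messages, oldest first.
    List<Message> messages() {
        List<Message> all = new ArrayList<>();
        if (systemMessage != null) {
            all.add(systemMessage);
        }
        all.addAll(others);
        return all;
    }
}
```

With a limit of 3, adding a system message followed by three other messages leaves the system message plus the two most recent ones. The first user message is the one that gets dropped.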

The SystemMessage essentially tells the LLM how to behave; it’s the personality of the chat, if you will. This SystemMessage is never cleared from the chat memory and is the first message we add when setting up the memory in the constructor. In the OllamaStreamingChatRepo.chat method, we create user messages and add them to the chat memory. A UserMessage is simply what you communicate to the LLM. Finally, we call the model.chat method, feeding it the messages held in the memory. The LLM will start responding almost immediately.

To handle the streamed responses and send them back to the client, we need a chat response handler. We’re implementing the StreamingChatResponseHandler interface for this. In the onPartialResponse method, we call the IClientChatResponse adapter (which we created earlier) to send the chunks we’re receiving from the LLM back to the client. In the onCompleteResponse method, we add the complete LLM response (an AiMessage) to the chat memory, expanding the context with the latest response, and send ~done~ to the client to indicate that the LLM is finished. The error handling in the onError method is basic: we print the stack trace and let the client know something went wrong.
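One piece the listings above rely on but never show is RepoException. The following is a minimal sketch, assuming it is a plain checked exception that mirrors ServiceException. The actual class in the example project may look different.

```java
// Hypothetical definition: the article references RepoException but does not show it.
// Modeled as a checked exception because IChatRepo.chat declares it in a throws clause.
// In a real project this would typically be a public class in its own file.
class RepoException extends Exception {

    RepoException(String message) {
        super(message);
    }

    RepoException(String message, Throwable cause) {
        super(message, cause);
    }
}
```

Note that OllamaStreamingChatRepo as shown never throws this exception, since streaming errors arrive through onError instead. It mainly keeps the IChatRepo contract open for implementations that fail synchronously.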

That’s really the core of it. Now, run the Spring Boot application and see if it all works! If not, retrace these steps to figure out what might have gone wrong.

Conclusion and final thoughts

As we’ve seen, building a simple chat using Ollama, LangChain4J, and Spring Boot is relatively straightforward. However, it’s important to remember that this is a basic implementation. To keep things simple, we’re currently storing the chat memory directly in the repository, and it’s only instantiated once when Spring Boot starts. This means the memory is shared among all users. If you’re planning to support multiple users, you’ll probably want to move the memory to a separate object that you can associate with each WebSocket session.
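As a starting point for that change, here is a minimal sketch of a store that hands out one memory object per session. The SessionMemoryStore class is hypothetical. It is generic so the snippet stays free of library dependencies; in the real app the type parameter would be a chat memory such as MessageWindowChatMemory.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

// Hypothetical helper: keeps one memory object per session id.
// M would be a chat memory type (e.g. MessageWindowChatMemory) in the real app.
class SessionMemoryStore<M> {

    private final Map<String, M> memories = new ConcurrentHashMap<>();
    private final Supplier<M> factory;

    SessionMemoryStore(Supplier<M> factory) {
        this.factory = factory;
    }

    // Returns the memory for this session, creating it on first use.
    M forSession(String sessionId) {
        return memories.computeIfAbsent(sessionId, id -> factory.get());
    }

    // Call when the session closes so memories do not pile up.
    void remove(String sessionId) {
        memories.remove(sessionId);
    }

    int size() {
        return memories.size();
    }
}
```

In the WebSocket handler, session.getId() could serve as the key, and overriding afterConnectionClosed would be a natural place to call remove. The repository’s chat method would then need the session’s memory (or its id) as an extra argument, and each new memory would need the SystemMessage added when the factory creates it.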

The MessageWindowChatMemory is great for prototyping, but it’s not ideal for real-world use. It keeps everything in memory, so eventually, you’ll want to persist that memory somewhere so you can access those chats later. Check out the official docs for more info. Also, MessageWindowChatMemory limits the context by the number of messages. If you want to limit it by the number of tokens instead, you’ll need TokenWindowChatMemory. TokenWindowChatMemory requires a tokenizer to count tokens. Tokenizers can vary depending on the LLM, so you’ll need to find one that works with your model.